🪲 Pooptimus Prime: The World's First Dung Beetle Biorobot

Executive Summary

- Pioneered creation of dung beetle biorobot; adapted sophisticated neural model into the robot's programming.

- Secured exclusive research grant to travel to South Africa to test the robot alongside real dung beetles.

- Achieved average grade of 82% and acknowledged as one of the ~20 "outstanding" projects in the year.

The Beetle Hunter Diaries

I know what you're thinking: "Finally, a page filled with adorable pictures of dung beetles!" Alas, I am but a humble software engineer, not exactly the next David Attenborough. But worry not, this page has something equally captivating -- the world's first dung beetle biorobot! Now that I have your undivided attention, let me take you on the adventure you came here for -- follow me into the dangerously glamorous world of dung beetle feeding behaviour.

First, a thought experiment. Close your eyes, take a deep breath, and imagine you're a dung beetle. (This may be an easier leap for some of you than for others.) Now, dung-beetle-you is a simple creature, with simple needs. Cruising the rolling South African grasslands, hitching a ride on the summer breeze, you catch the wafting scent of a fresh juicy dung pile. Tasty stuff! Swooping down, you follow your well-honed instincts and quickly get to work crafting the perfect dung ball. Fast forward to putting the finishing touches on your magnum opus, and with a final pat, you step back to bask in the glory of your creation. This is the Sistine Chapel of dung balls.

But, uh oh, what's this? You're not the only one who's noticed your fine craftsmanship. A nefarious rival has been lurking in the shadows, eyeing up your spherical masterpiece with envy. He's hungry, he's lazy, and he's clearly got no intention of making his own dinner. If you want to save your food baby, you've gotta act fast. Decision time! What do you do?

If you answered "get the hell out of there", you're thinking like a dung beetle. In fact, you might have been one in a past life. So you probably already know that the quickest way to get out of there is to roll your ball in a straight line away from the dung heap. But have you ever tried walking in a straight line with your eyes closed? Not as simple as it seems, right? You'll likely end up veering off to the side, or even walking in circles. So how do dung beetles do it? Well, the secret is... they don't close their eyes! Don't tell me you didn't see that coming. And some very clever scientists (whom I had the privilege of learning from in South Africa) have shown pretty conclusively that dung beetles use what they see in the sky to keep their fabulous fecal formations rolling straight into the sunset. The two most important visual cues are:

- The intensity-gradient cue.

  - This comes from the position of celestial objects such as the sun, moon, and even the stars!

- The polarisation cue.

  - This comes from the polarisation pattern of the sky (explained more below).

Say Hello to My Little Friend

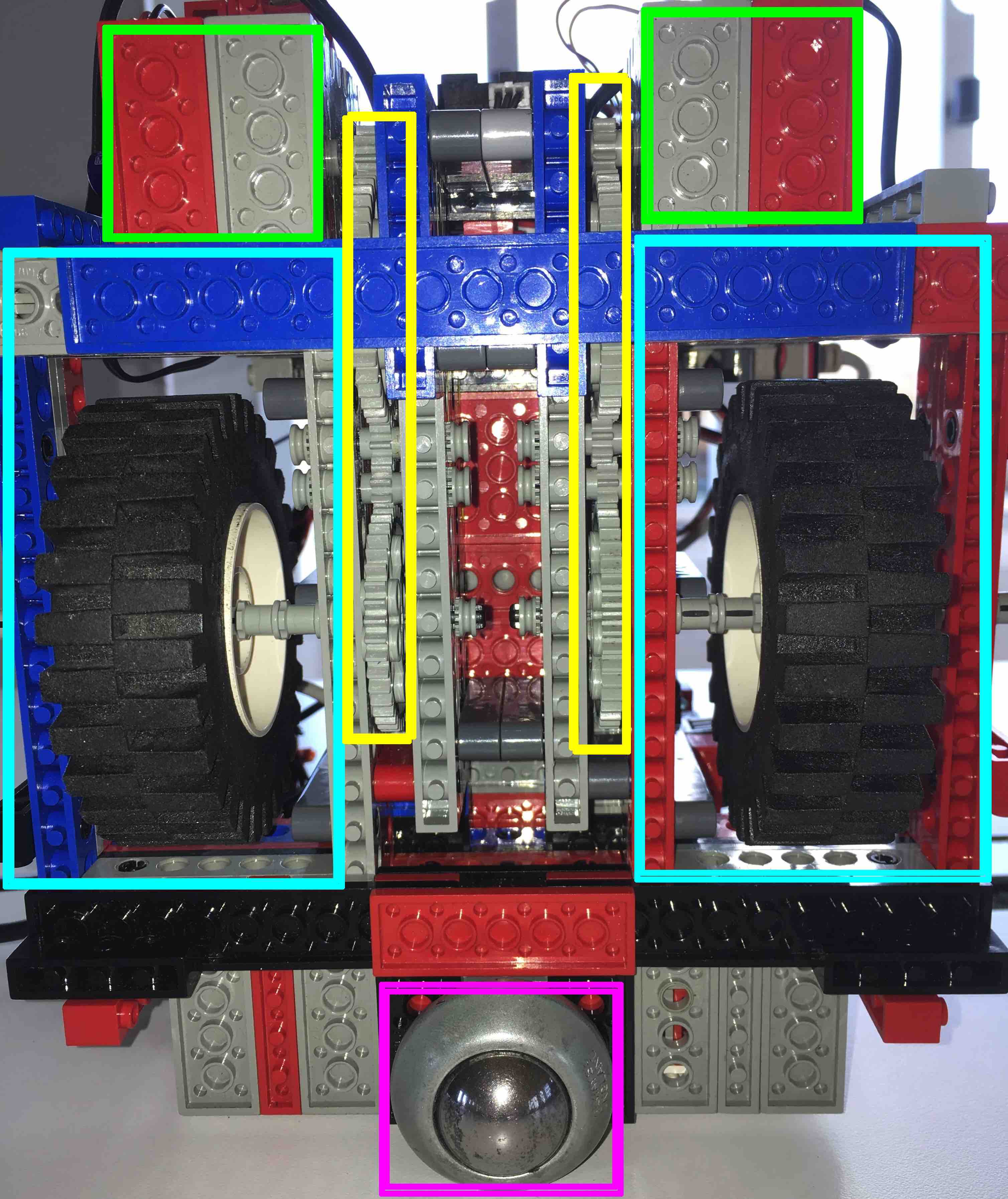

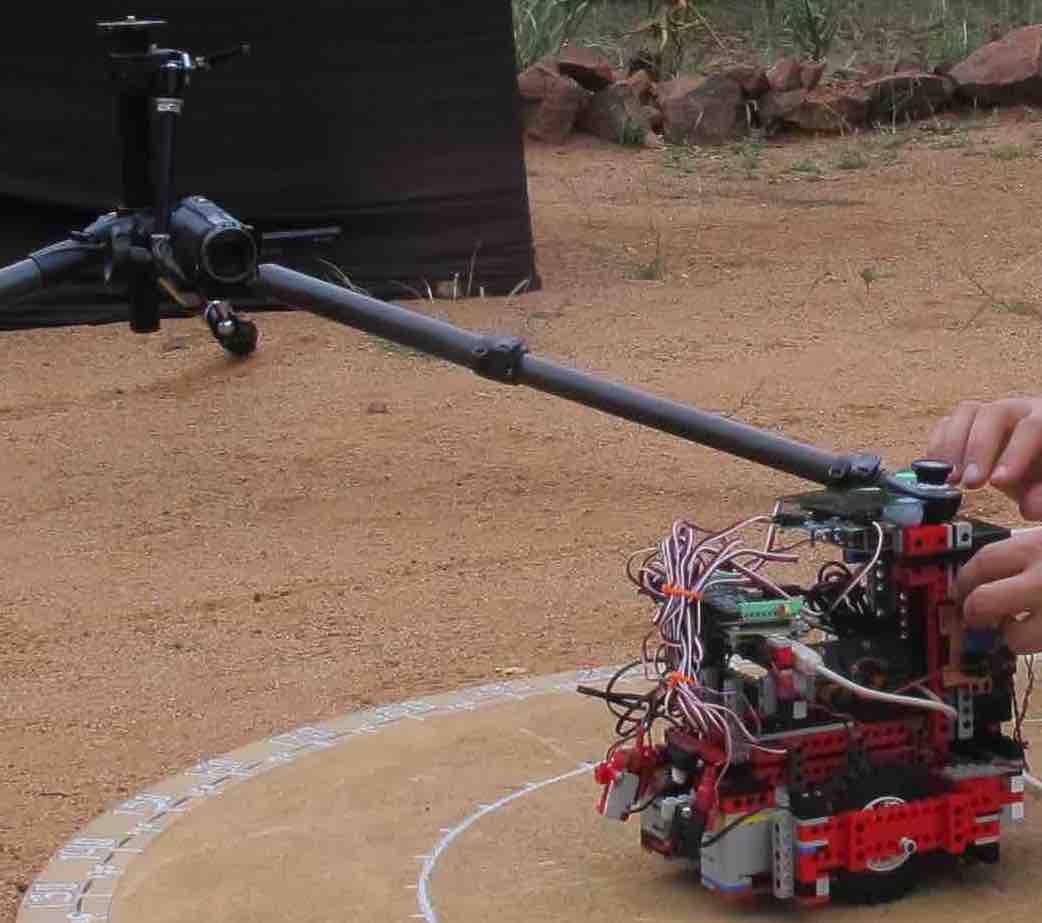

So why build a dung beetle robot? Well, let me tell you. Young Pooptimus Prime isn't just any old robot, he's a biorobot, meaning he's a model of the real-deal biological system, and is used to test hypotheses about how dung beetles think and behave, all the way down to their neural circuitry! Sporting two independently controlled wheels and a ball-bearing for balance, Pooptimus is a lean, mean, dung-ball-rolling machine, and can go straight, turn on the spot, or make a forward curve -- all basic dung beetle moves. But if you're picturing some sophisticated, state-of-the-art equipment, think again. Pooptimus is a simple creature, built robustly to withstand the harsh and accident-prone life of an experimental biorobot, but cheaply, from nothing but LEGO, some electronics, and the special ingredient that brought him to life... ❤️ ...that's right, programming!

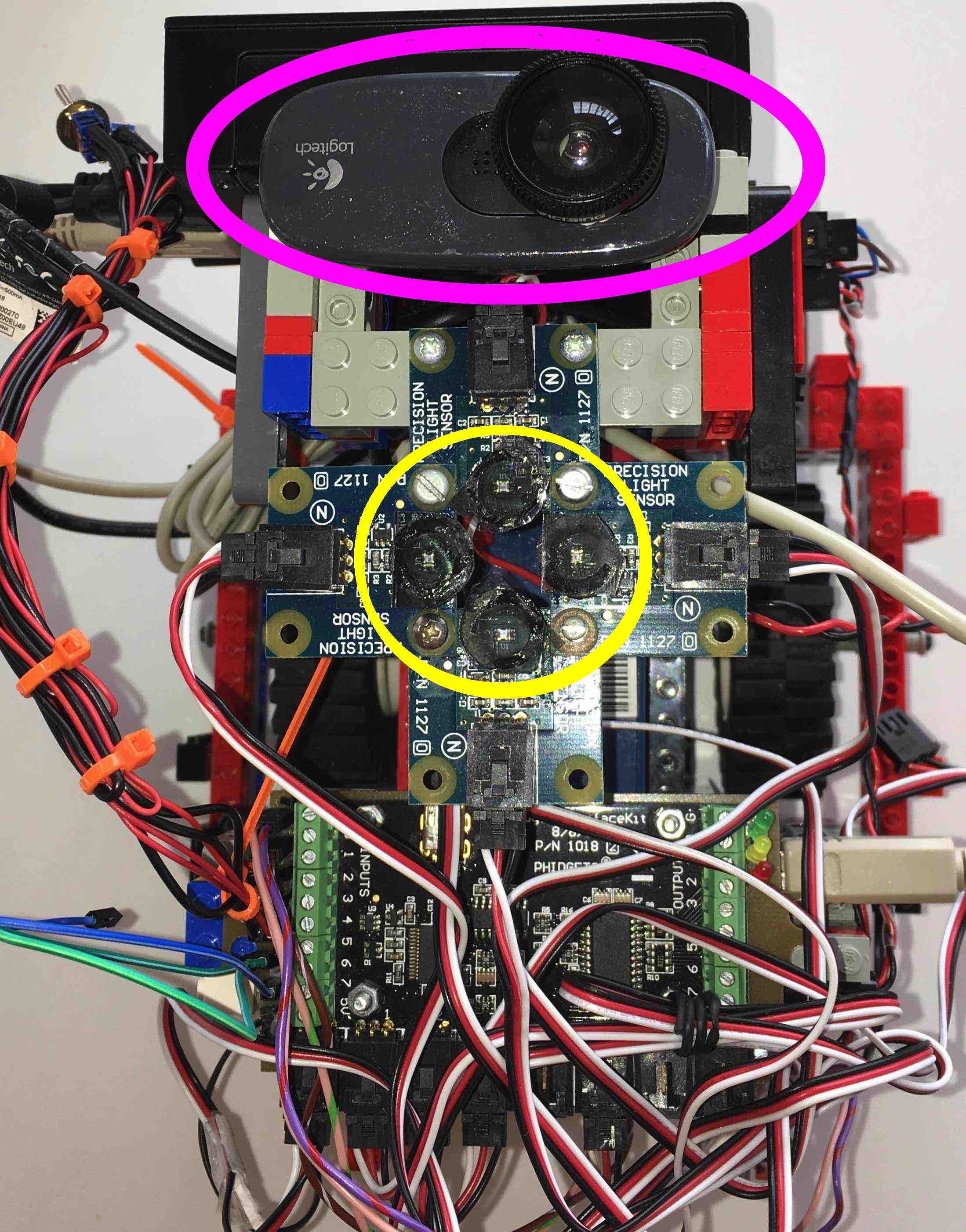

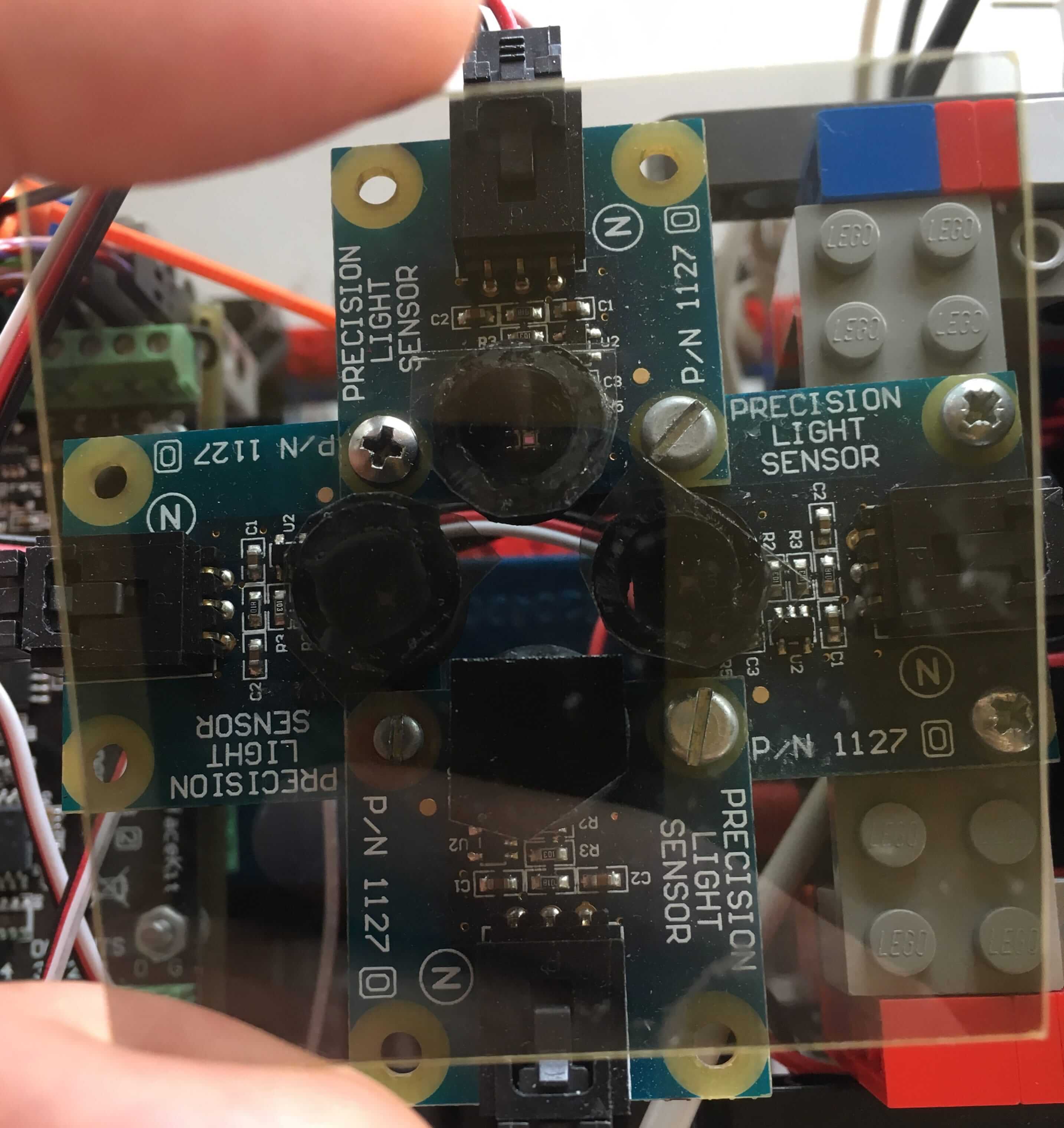

But Pooptimus needs more than just movement to be a true dung beetle. He has to see like one, too. That's why he has a built-in digital camera set to gaze up into the heavens, with a fish-eye lens allowing him to perceive light from across a large chunk of the sky simultaneously. He's also equipped with a custom-built array of four light sensors, each filtered at a different angle of polarisation. Together, these enable him to calculate the angle of polarisation of the light coming from the sky, just like his dung beetle brethren.

By the way, if all this polarisation nonsense has you scratching your head, here's the 101. Polarised light is light that oscillates in a specific direction. This direction is different depending on where you look in the sky, and the pattern across the sky changes based on the sun's position. Nevertheless, if you're looking straight up from a fixed ground position, the angle of polarisation remains stable enough for navigation. Like the North Star for humans, polarisation helps lost dung beetle souls find their path through the wilderness. But wait! Before you run outside and peer at the sky in vain, you should know that humans can't tell the difference between polarised and unpolarised light. But guess who can? Who's a good robot, who's a good robot? That's right, Pooptimus can!

Check out the light sensors in the figure. A polarising filter covers the entire setup, permitting only the light polarised in a specific direction to pass through. As each sensor has its own mini-filter set at a different angle, varying light quantities reach each one. These four data points together empower Pooptimus to calculate the sky's polarisation angle at any moment. And with that, he's ready to roll!
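For the curious, here is a minimal sketch of how four filtered readings can be turned into a polarisation angle. It is an illustration, not the code running on Pooptimus: the filter angles of 0, 45, 90 and 135 degrees are an assumption (the exact angles aren't stated here), and the function names are made up for this example. The trick is the textbook one of forming two difference signals from opposite filter pairs and taking half of their arctangent.

```python
import math

# Assumed filter orientations in degrees (hypothetical; chosen for this sketch).
FILTER_ANGLES = (0.0, 45.0, 90.0, 135.0)


def simulate_reading(sky_angle_deg, degree, filter_angle_deg, brightness=2.0):
    """Ideal sensor reading behind a linear polarising filter (Malus's law)."""
    delta = math.radians(sky_angle_deg - filter_angle_deg)
    return 0.5 * brightness * (1.0 + degree * math.cos(2.0 * delta))


def polarisation_from_sensors(i0, i45, i90, i135):
    """Return (angle in degrees within [0, 180), degree of polarisation in [0, 1])."""
    total = (i0 + i45 + i90 + i135) / 2.0   # overall brightness
    s1 = i0 - i90                           # 0/90 degree contrast
    s2 = i45 - i135                         # 45/135 degree contrast
    if total <= 0.0:
        return 0.0, 0.0                     # no light, no estimate
    angle = 0.5 * math.atan2(s2, s1)        # half-angle: polarisation repeats every 180 degrees
    degree = math.hypot(s1, s2) / total
    return math.degrees(angle) % 180.0, degree


readings = [simulate_reading(30.0, 0.6, a) for a in FILTER_ANGLES]
angle, degree = polarisation_from_sensors(*readings)
```

The second return value, the degree of polarisation, says how strongly polarised the light is, so a washed-out sky gives a value near zero.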

Inside the Beetle Brain: It's Not All Dung and Games

As beautiful as Pooptimus is on the outside, we all know it's what's on the inside that counts. You see, one doesn't build a dung beetle biorobot simply for shits and giggles (pun intended). The real goal was to venture into the enigmatic labyrinth that is the dung beetle brain. So let's take a peek inside, shall we?

The part of the brain that's responsible for navigation in insects is called the central complex (CX). This is a GPS-like structure that acts as a sort of 'mission control', reporting on the animal's movement through the world. It's an intricate mesh of interlinked neuropils (think neural spaghetti) that receives inputs from the eyes and other sensory organs, and sends out instructions to the beetle's little legs. This ancient and essential system has survived millions of years and is remarkably similar across multiple insect species, proving that evolution agrees with the adage "if it ain't broke, don't fix it".

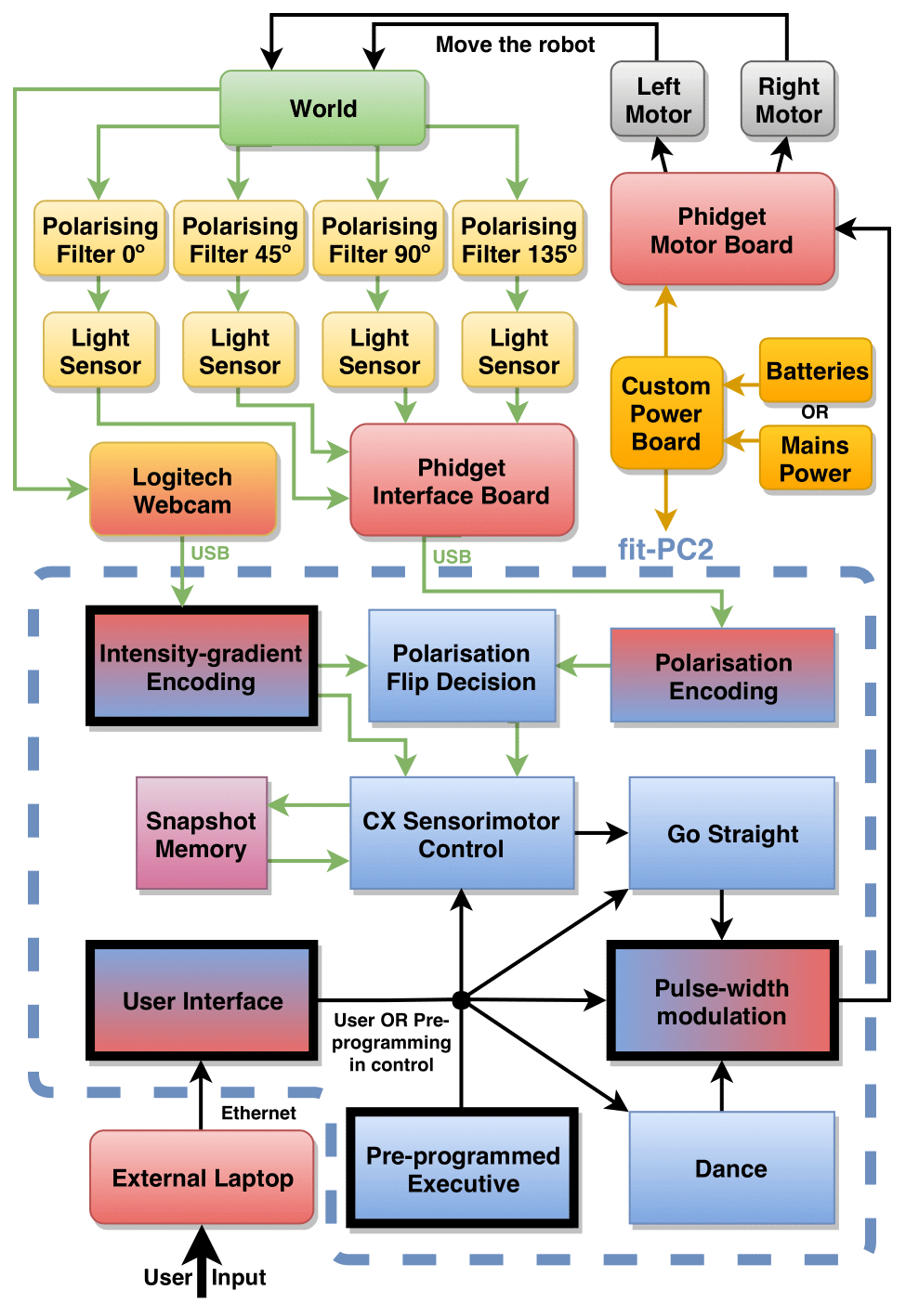

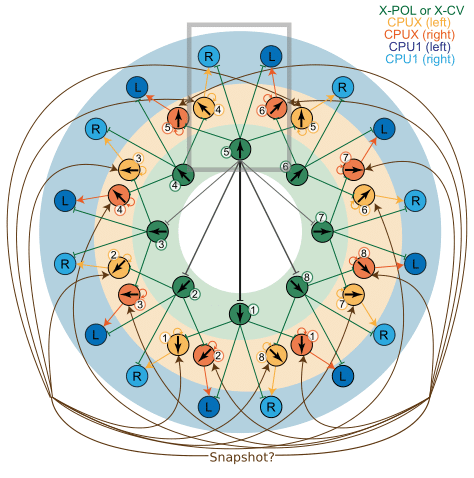

In the figure here you can see my adaptation of a CX model of path integration in bees to straight-line navigation in dung beetles. First, let's visit the neighbourhood of green neurons. These band together to form a ring that works just like a compass. They keep a pulse on the beetle's current heading, making sure our little navigator knows which direction it's facing at the moment.

Next, let's take a walk down memory lane, where the yellow and orange neurons reside. Think of these as the beetle's trusty old scribes, remembering a 'snapshot' of the sky's configuration when the beetle begins its journey. They keep this valuable snapshot safe, as it records the direction our intrepid dung beetle should be heading if it wants to get away from those nasty dung-ball-stealing rivals.

(The snapshot is actually taken while the beetle does a little dance on top of its ball, which is probably the cutest thing you'll ever see. Unfortunately I didn't have time to integrate this into the CX model, but I manually programmed the robot to do a little dance before each roll, because it was just too adorable to leave out.)

Our final stop is the blue neuron district. Here, we meet the unsung heroes, the diligent workers who keep the wheels, or legs, turning. They function as the motor behind the beetle's movements, helping it course-correct if it strays off the beaten path. By comparing what the green neurons are saying (where we're currently heading) to what the yellow and orange neurons are saying (where we should be heading), they can calculate the direction the beetle should turn in order to get back on track. Imagine them as a team of highly certified life-coaches (if there is such a thing), constantly providing instructions on which way to turn to ensure our dung beetle stays on the straight and narrow.
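The compass, snapshot and steering roles described above can be caricatured in a few lines. This is a heavily simplified sketch, not the actual CX model: the eight-neuron ring, the cosine-shaped activity and the `gain` value are all assumptions made for illustration. The steering neurons ask a simple question: would the current compass pattern match the snapshot better if we turned a little left, or a little right?

```python
import math

N = 8  # number of compass neurons in the ring (assumed for this sketch)
PREFERRED = [2.0 * math.pi * k / N for k in range(N)]


def compass_activity(heading):
    """Green ring: each neuron fires most when the heading matches its preferred direction."""
    return [0.5 * (1.0 + math.cos(heading - p)) for p in PREFERRED]


def steering_signal(current, snapshot):
    """Blue neurons: positive means turn counter-clockwise, negative means clockwise.

    The current pattern is shifted one neuron either way, which mimics a
    small turn in each direction, and each shift is scored against the snapshot.
    """
    left = sum(snapshot[k] * current[(k - 1) % N] for k in range(N))
    right = sum(snapshot[k] * current[(k + 1) % N] for k in range(N))
    return left - right


def roll(goal, heading, steps=200, gain=0.2):
    """Take a snapshot at the goal heading, then keep correcting towards it."""
    snapshot = compass_activity(goal)  # yellow/orange memory
    for _ in range(steps):
        heading += gain * steering_signal(compass_activity(heading), snapshot)
    return heading % (2.0 * math.pi)


final_heading = roll(goal=1.0, heading=2.5)
```

With these cosine-shaped activities the steering signal works out to be proportional to the sine of the heading error, so the correction shrinks smoothly as the robot lines up with its snapshot.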

Visual Cue Integration: The Sky's the Limit

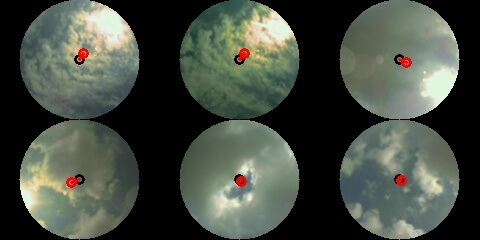

Once upon a time, scientists thought that dung beetles had two different visual cues related to brightness: the sunniest point in the sky as an anchor, or just the general glow of the horizon. I even built a fancy model to mimic this idea, but it was about as useful as a chocolate teapot! Then, my genius supervisor had a light bulb moment -- what if the beetles use them both together, like a one-stop-shop for navigation? I jumped on this concept and whisked the two factors into one integrated "centroid vector" (CV), also called the intensity-gradient cue. Don't believe me? Just check out these robot's-eye views of South African skies. The black circles are the centre of the visual field, and the small red lines are the CVs, calculated by integrating the brightness across the whole sky. It worked like a charm!
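To make "integrating the brightness across the whole sky" concrete, here is a minimal sketch of a brightness-weighted centroid. It treats the sky image as a plain grid of brightness values and ignores real-world details such as the circular fish-eye mask and lens distortion; the function name and the pixel-unit length are assumptions for this example rather than the project's actual code.

```python
import math


def centroid_vector(image):
    """Return (direction in degrees, length in pixels) of the brightness centroid.

    `image` is a list of rows of non-negative brightness values. The vector
    points from the image centre towards the brightness-weighted centroid.
    """
    rows, cols = len(image), len(image[0])
    centre_y, centre_x = (rows - 1) / 2.0, (cols - 1) / 2.0
    total = sum_x = sum_y = 0.0
    for r, row in enumerate(image):
        for c, brightness in enumerate(row):
            total += brightness
            sum_x += brightness * (c - centre_x)
            sum_y += brightness * (centre_y - r)  # image rows grow downwards, so flip
    if total == 0.0:
        return 0.0, 0.0  # pitch black: no cue at all
    vx, vy = sum_x / total, sum_y / total
    return math.degrees(math.atan2(vy, vx)) % 360.0, math.hypot(vx, vy)


sky = [
    [1, 1, 4],
    [1, 1, 9],  # a bright patch on the right-hand side
    [1, 1, 4],
]
direction, length = centroid_vector(sky)
```

A perfectly uniform sky gives a vector of length zero, while a single bright sun drags the centroid strongly towards it, so the length doubles as a rough measure of how informative the cue is.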

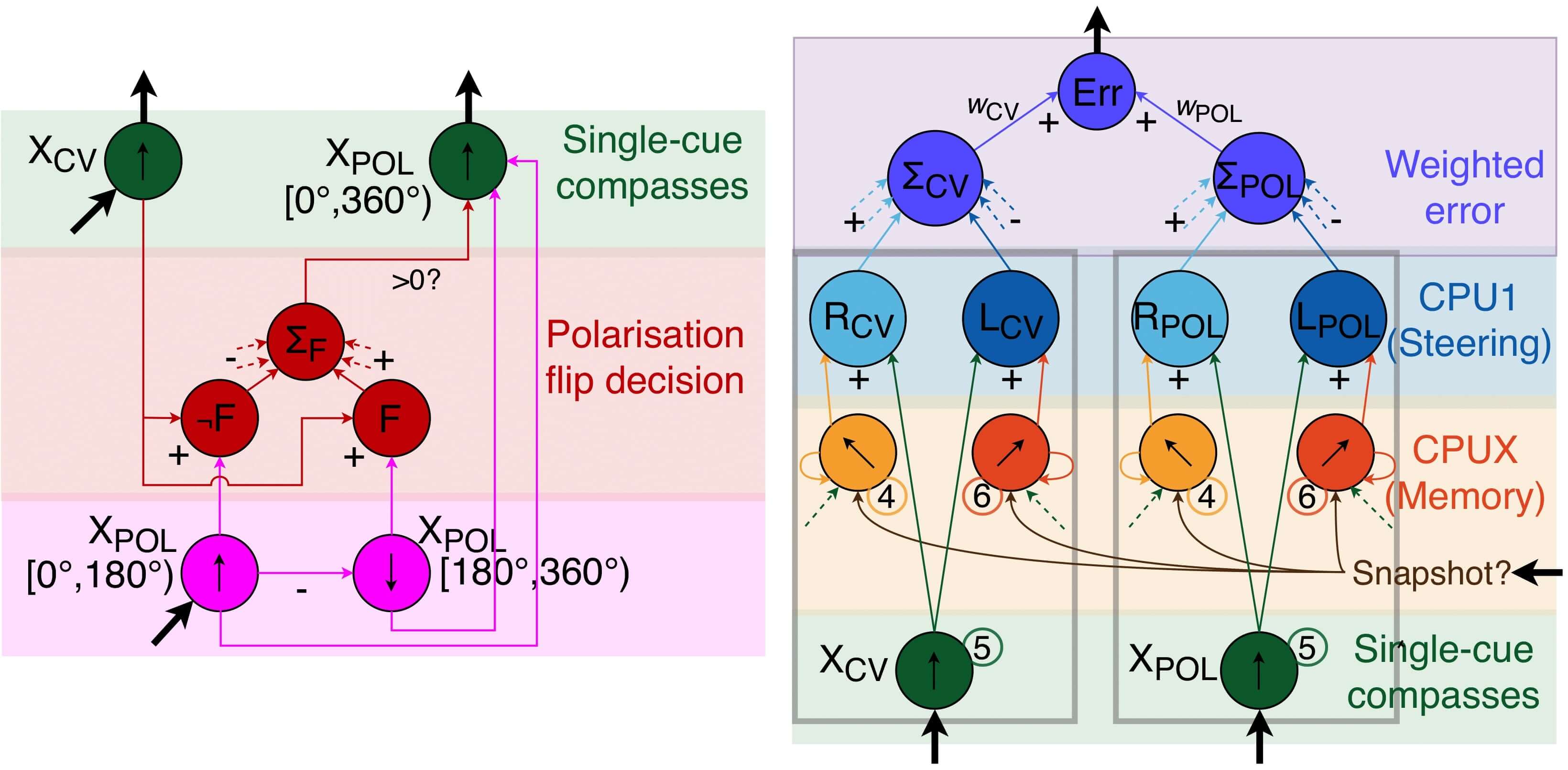

Now, let's chew over the polarisation cue. I've already shown off the robot's fancy polarisation sensor, but an issue neglected in the original CX model was that polarisation only covers 180 of the 360 degrees you might want to roll in. Like using a half-eaten compass, you could be facing one way or its exact opposite and get the same polarisation reading. So how does the robot -- or beetle, for that matter -- know its nose from its tail? To tackle this, I masterminded a neurologically plausible mechanism that can flip the polarisation angle if the compass is upside down. We don't know for sure yet, but if the beetles do this too, they're at least as smart as me!
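Stripped of all the neurons, the arithmetic behind the flip looks something like the sketch below. This is not the neural mechanism itself, just the underlying idea under one assumption: that some other 360-degree heading estimate is available as a reference (here a hypothetical `reference_deg`, for example the compass's current heading). Of the two headings consistent with the polarisation reading, we keep whichever lies closer to that reference.

```python
def resolve_polarisation_heading(pol_angle_deg, reference_deg):
    """Turn a 180-degree-ambiguous polarisation angle into a full 360-degree heading.

    `reference_deg` is an assumed independent heading estimate; the candidate
    (pol_angle or pol_angle + 180) nearest to it wins.
    """
    candidate = pol_angle_deg % 180.0
    # Signed difference wrapped into [-180, 180).
    diff = (candidate - reference_deg + 180.0) % 360.0 - 180.0
    if abs(diff) > 90.0:
        candidate = (candidate + 180.0) % 360.0  # compass was "upside down": flip it
    return candidate


heading = resolve_polarisation_heading(10.0, reference_deg=200.0)
```

When the reference sits exactly 90 degrees from both candidates there is nothing to choose between them, and this sketch simply keeps the unflipped angle.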

So we've got these two navigational aces up our sleeves, intensity-gradient and polarisation, but there's only one road to roll our dung ball down. What happens when the cues conflict? It's like Buridan's Ass, the donkey placed exactly halfway between two equally delicious haystacks. We can't stall forever or we'll starve to death, so I dived into the science to resolve this donkey's dilemma. It was about as clear as mud at first, but in the end I pinned down the two strategies influencing cue choice.

- Weighting of the whole cue.

  - Let's admit it, we all play favourites. If you're choosing between a vending machine sandwich and your mother's homemade soup, it's no contest. That's how it goes with the intensity-gradient cue -- it's the trusted soup over the dubious sandwich. I reckon this preference is innate, a product of eons of evolution, and varies between kinds of beetles: day-rolling beetles favour the intensity-gradient cue, for example, while night-rolling beetles favour the polarisation cue.

- Weighting of each measurement.

  - The importance of each cue for navigation can shift faster than a politician backtracking on a campaign promise. One minute you're cruising along, heeding the polarisation cue, and -- kaboom! -- a big-ass cloud gatecrashes the party and muddles the pattern. My theory is that our beetle friends are perpetually tweaking the weights of each cue based on the quality of the data received. Even better, I already had a metric for the quality of each measurement: the length of the line for the CV, and the degree of polarisation calculated from the polarisation sensor.
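The two weighting strategies above can be sketched as a weighted vector sum. Treat this as an illustration under stated assumptions rather than the model's real maths: multiplying the innate weight by the per-measurement quality is one plausible way to combine them, and the numbers in the example are invented.

```python
import math


def combine_cues(cues):
    """Combine heading cues into one heading in degrees, or None if nothing is usable.

    Each cue is (heading_deg, innate_weight, quality), where `quality` would be
    the CV length or the degree of polarisation for that measurement.
    """
    x = y = 0.0
    for heading_deg, innate_weight, quality in cues:
        weight = innate_weight * quality  # assumed combination rule for this sketch
        x += weight * math.cos(math.radians(heading_deg))
        y += weight * math.sin(math.radians(heading_deg))
    if x == 0.0 and y == 0.0:
        return None
    return math.degrees(math.atan2(y, x)) % 360.0


# Hypothetical day-roller: favours the intensity-gradient cue, but a cloud has
# just weakened it, so the polarisation cue gets more of a say than usual.
intensity_cue = (40.0, 0.7, 0.2)
polarisation_cue = (60.0, 0.3, 0.8)
heading = combine_cues([intensity_cue, polarisation_cue])
```

Because the cues are added as vectors, a cue with zero quality simply drops out, and two equally trusted cues that disagree pull the heading to the midpoint between them.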

Finally we come to the most beautiful and satisfying part of the whole project. When I went to implement these two cue combination strategies, I found that they both fit perfectly into the CX model. Can you believe it? I won't bore you with the math, but it was on par with solving one face of a Rubik's cube and finding the rest already solved for you. Isn't it just the best when a plan comes together? I was as happy as a dung beetle in a dung heap.

Rolling in the Deep: A South African Saga

Ah, the highlight of my university experience. Fifth year hit its crescendo when I got the golden ticket -- an invite to South Africa to field test the robot under the very same magnificent skies that our tiny beetle friends call home. Even better, my proposal was accepted for an exclusive research grant to cover the cost of the whole trip!

I was only there for 10 days, but I can definitely say that biologists know how to have a good time! I was treated to a whirlwind tour of local wildlife, coming nose-to-trunk with a hungry baby elephant, and an adrenaline-pumping drag race with an ostrich that thought it could outrun a particular dung-beetle-loving researcher (spoiler: it couldn't). And let's not even get started on the stars. Light pollution-free, they're a celestial canvas that left me breathless. I can understand why the dung beetles love it there so much! Of course, the trip would've been incomplete without a face-to-face rendezvous with the dung beetle celebrities themselves. Fun fact: If you dare a dung beetle to an uphill roll, the stubborn critter just keeps going! It's like they're on a treadmill!

Oh, and the robot worked! There were a couple of unforeseen weather issues, but we got some good data. The results showed that the robot was able to go just as straight as the beetles under the same skies, and that the weighting of the cues was adapting to the situation. Thus, my adapted CX model was validated, and we can be confident that it's a good representation of what's really going on under the beetle's bonnet. Brains are complicated, but one small step for science is one big leap for beetle-kind!

Never before has a robot been used to study dung beetle navigation, and I'm proud to have been the first. A massive thanks to everyone who made this journey possible. It was an experience I'll never forget!